To acquire data for my project mentioned here, I wanted to scrape the comments section of a Norwegian newspaper.

Initially I thought it would be as simple as fetching the page source and applying whatever regular expressions would give me what I wanted. Sadly, it was not that simple.

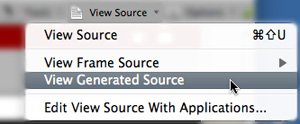

My chosen Norwegian newspaper uses Disqus as its comment system. Disqus relies heavily on JavaScript to generate HTML, which means the comments are not part of the original page source and exist only in the browser DOM. This problem can be solved by using “view generated source” in the Firefox developer plugin, or by writing a browser plugin that manipulates the DOM directly. For my problem, viewing the generated source was the simpler answer. If I wanted to scrape Disqus often, I would write a plugin to dump the generated source.

Sadly, this was not the only problem with scraping Disqus. Disqus is configured to show only a small number of comments at a time, and to show more, a JavaScript routine must be invoked repeatedly. I simply triggered it manually many times, though again, if I really wanted to scrape Disqus-based systems a lot, I would automate this step.

Once I had the data, I loaded it into a Java routine that removes unwanted data using regular expressions (accessing the DOM directly would be faster, and I would do that if I had lots of data). I then generate descriptive parameters (word count, punctuation count, etc.) from the post content. The resulting parameters are stored as JSON, which in turn is used as the basis for the scatterplots here.
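
The processing step described above can be sketched roughly like this. This is not my actual routine, just a minimal illustration under some assumptions: the class name `CommentStats`, the helper names, the tag-stripping regex, and the JSON field names `wordCount` and `punctuationCount` are all made up for the example, and a real Disqus dump would need more cleanup than removing tags.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch: strip markup from one comment and derive simple parameters.
class CommentStats {
    // Assumption: anything between '<' and '>' is markup we do not want.
    private static final Pattern TAG = Pattern.compile("<[^>]+>");
    // A "word" here is a run of Unicode letters or digits (covers æ, ø, å).
    private static final Pattern WORD = Pattern.compile("[\\p{L}\\p{N}]+");
    // ASCII punctuation characters only.
    private static final Pattern PUNCT = Pattern.compile("\\p{Punct}");

    // Replace tags with spaces, then collapse runs of whitespace.
    public static String stripTags(String html) {
        return TAG.matcher(html).replaceAll(" ").replaceAll("\\s+", " ").trim();
    }

    // Count non-overlapping matches of a pattern in the text.
    public static int count(Pattern pattern, String text) {
        Matcher matcher = pattern.matcher(text);
        int n = 0;
        while (matcher.find()) {
            n++;
        }
        return n;
    }

    // Build a small JSON object by hand; the values are plain integers,
    // so no escaping is needed.
    public static String toJson(String html) {
        String text = stripTags(html);
        int words = count(WORD, text);
        int punctuation = count(PUNCT, text);
        return "{\"wordCount\": " + words + ", \"punctuationCount\": " + punctuation + "}";
    }

    public static void main(String[] args) {
        System.out.println(toJson("<p>Hei, dette er en <b>test</b>!</p>"));
    }
}
```

Regular expressions are fine for a one-off dump like this, but they are fragile against nested or malformed markup, which is another reason to work on the DOM directly if the amount of data grows.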